Launching Earthling Messages into Orbit for Eddie Vedder

In Support of Earthling

Space is one of those topics that keeps coming up in my work, from the Khruangbin Shelter In Space playlist generator to the David Bowie Moon Unlocker, all the way back to 2017’s Celestial Navigator for Foo Fighters. It is a topic that both inspires and confuses the hell out of me.

When I was writing ideas for Bowie, I arrived at two potential directions: the Moon Unlocker (which we developed) and something I was calling “Message in a Satellite.” Since we were celebrating the 50th anniversary of “Space Oddity” at the time, I thought it would be interesting to allow fans to write or record a short message to Bowie. That message would then be virtually launched into space and begin a unique orbit around the earth. Users could then use a custom-built Web AR app to go outside and hear the messages orbiting over their current location. It’s your classic “message in a bottle” mechanic but the bottle is a satellite and the ocean is, well, space.

As I’ve said many times before on this dev blog, I am very patient when it comes to finding the right home for the right concept. In reality, this idea originated back in 2017 when I was plotting the sun and moon in the sky while testing orientation on the Celestial Navigator for Foo Fighters. I actually pitched it to Nick Jonas last year for his Spaceman release but it just wasn’t quite right at that moment. So, you could imagine my excitement last month when Republic Records was asking for ideas for a new Eddie Vedder solo record called Earthling. I pitched the concept alongside a bunch of other incredible ideas which the marketing team there had amassed. We agreed this particular concept would fit nicely with the themes Eddie was tackling on his first solo album in over a decade.

Eddie Vedder, Pearl Jam, and the grunge / alternative music scene were a huge part of my teenage years. I feel like I’ve knocked out most of the other big ones, having had the opportunity to build apps for Smashing Pumpkins, R.E.M., Chris Cornell, Sonic Youth, and Foo Fighters. I feel a certain level of preciousness when working for those artists that inspired me to work in this industry in the first place. Eddie is no exception.

After three weeks of crunching celestial math, we launched the Earthling Contact experience and saw fans launch hundreds of messages that night. Initially, we were concerned about unsavory messages but we were delighted to hear messages conveying kindness, hope, and a love for Eddie’s music. Make contact today and read on to find out how it came together.

Can you hear?

Are we clear?

Cleared for lift off

Takeoff

Making Contact

The app has two core user interactions: recording new messages and exploring existing ones. Let’s first discuss how we handled the web powered audio recording experience.

Get Microphone Access

To begin with, we’ll need access to the user’s microphone. As I’ve covered on many dev blogs before, we can do this by simply using getUserMedia.

// Get microphone

navigator.mediaDevices.getUserMedia({

audio: true,

video: false

})

.then(stream => {

// Use stream

})

.catch(error => {

// Handle error

})

Before we talk about recording, let’s quickly discuss how I’m visualizing the user’s microphone stream.

Visualize using Web Audio API

I’ve decided to visualize the incoming audio stream as an audio spectrum. In order to do this, we’ll first need some real time audio data. We can get this by setting up a Web Audio API AnalyserNode and connecting to the microphone stream we gained access to above.

// Create new audio context

let context = new AudioContext()

// Initialize stream source for microphone

let microphone = context.createMediaStreamSource(stream)

// Initialize analyser

let analyser = context.createAnalyser()

// Set FFT size

analyser.fftSize = 32

// Get buffer length

let bufferLength = analyser.frequencyBinCount

// Initialize time array

let timeArray = new Uint8Array(bufferLength)

// Connect microphone to analyser

microphone.connect(analyser)

Then, in an animation loop, we can continually get the analysis data using requestAnimationFrame.

const analyze = () => {

// Get data

analyser.getByteTimeDomainData(timeArray)

// Request animation frame

requestAnimationFrame(analyze)

}
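If you want a single level value rather than the raw samples, here is a minimal sketch of one way to get there. It assumes the byte data filled in by getByteTimeDomainData, where 128 represents silence. The helper names normalizeSamples and rmsLevel are hypothetical and not part of the Web Audio API.

```javascript
// Map AnalyserNode byte samples (0..255, 128 = silence) to roughly -1..1
const normalizeSamples = (timeArray) =>
  Array.from(timeArray, (value) => (value - 128) / 128)

// Root mean square of the normalized samples: a rough loudness level
const rmsLevel = (timeArray) => {
  const samples = normalizeSamples(timeArray)
  const sumOfSquares = samples.reduce((sum, s) => sum + s * s, 0)
  return Math.sqrt(sumOfSquares / samples.length)
}

// Hand-made buffers standing in for a live microphone
const silent = new Uint8Array(16).fill(128)
const loud = Uint8Array.from({ length: 16 }, (_, i) => (i % 2 ? 255 : 1))

console.log(rmsLevel(silent), rmsLevel(loud))
```

Inside the analyze loop, you could call rmsLevel(timeArray) on every frame and feed the result to whatever is drawing the line.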

From here you can use whatever visualization solution you’d like. I chose to use the Paper.js vector graphics library to take the levels of the analysis and create a dynamic line. I discuss this a bit in the A Quiet Place dev blog but on this project, I evolved that solution into a Vue component. Here’s a gist of that component. I can then import it into my recording page and include it, passing the microphone stream as a prop.

<AudioSpectrum :stream="stream"></AudioSpectrum>

Now then, let’s talk about actually recording that audio stream.

Recording Audio With MediaRecorder

Oh, what a time to be alive, now that the MediaRecorder API is supported by most modern browsers. We can now record audio, video, and even canvas elements. Let’s first initialize a recorder and pass it our microphone stream. We’ll also declare an array which will contain all of the recorder chunks. As audio data is available, we’ll push it into the array. Finally, we’ll listen for the recording to stop and use that moment to create a blob from the recorded data.

// Initialize chunks

let chunks = []

// Initialize recorder

let recorder = new MediaRecorder(stream)

// Recorder data available

recorder.ondataavailable = (e) => {

// Push chunks

chunks.push(e.data)

}

// Recorder stopped

recorder.onstop = (e) => {

// Initialize blob

let blob = new Blob(chunks, {

type: recorder.mimeType

})

}

We can then start the recorder, telling it to send a chunk of data every second.

recorder.start(1000)

Since we decided on a max recording length of 15 seconds, I used a setTimeout function to make sure recording stopped after 15 seconds. Regardless of your implementation, you’ll stop recording by calling the stop method.

recorder.stop()

Now this works pretty well across many browsers but there is one issue: all of these browsers record audio in different formats. Wouldn’t it be nice if everything existed as a nicely compressed and compatible MP3 file? In order to handle this, we’ll bring in a file processor.

Convert to MP3 and Store on S3

I’ve used Transloadit on many client and software apps to handle file uploads. In the case of this project, we’ll want to use it to take that recorded audio blob, convert it to an MP3, and then store it in an S3 bucket.

This all begins by creating a template on Transloadit that explains how the file will be handled. In addition to specifying that any audio received should be encoded to an MP3, we’ll also say that the encoded files should be stored on an AWS S3 bucket we provisioned. Transloadit has this great Template Credentials feature which allows you to securely specify the AWS credentials and bucket you’re using.

{

"steps": {

":original": {

"robot": "/upload/handle"

},

"mp3": {

"use": ":original",

"robot": "/audio/encode",

"result": true,

"ffmpeg_stack": "v4.3.1",

"preset": "mp3"

},

"exported": {

"use": "mp3",

"robot": "/s3/store",

"credentials": "EARTHLING_CREDENTIALS"

}

}

}

To integrate this into our web app, we’ll use Transloadit’s incredible open-source file uploader project, Uppy. During configuration, we can tell Uppy we wish to use a specific Transloadit template to handle files. You can even dynamically adjust the bucket name depending on your current environment.

// Initialize Uppy

let uppy = new Uppy()

// Set up Transloadit

uppy.use(Uppy.Transloadit, {

waitForEncoding: true,

params: {

auth: {

key: TRANSLOADIT_KEY

},

template_id: TRANSLOADIT_TEMPLATE_ID,

steps: {

exported: {

bucket: S3_BUCKET

}

}

}

})

Then, when you’re ready, add the file, upload it, and wait for a result from Transloadit. This should include the url of the file stored on your S3 bucket.

// Add blob to Uppy

uppy.addFile({

name: 'recording.mp3',

type: blob.type,

data: blob,

source: 'Local',

isRemote: false

})

// Upload to Uppy

uppy.upload().then(result => {

// Handle result

})

That recording URL, along with some orbiting parameters, is stored in DynamoDB.

Orbital Mechanics

I studied so many ways to manage orbiting in our app, including defining a fake TLE for each user satellite. However, when I came across Jake Low’s excellent Satellite ground track visualizer on Observable, I realized I could simplify things. You can thank Johannes Kepler for the laws of planetary motion and we’ll use some of the six Keplerian elements to establish our satellite orbits. The two parameters we are most interested in are the Inclination (i) and Longitude of the ascending node (Ω). Inclination will define the tilt of our orbit and the ascending node will define the swivel. As part of our experience, users will be able to customize both of these elements. But first, we’ll need some physics parameters.

Physics Parameters

I borrowed the physics parameters from Jake’s example and added them all to a Vuex store because I knew I would be using them often in many components of the app. First, a state was established which included the earth’s angular velocity, mass, and radius. In addition, I stored the value for gravity and the intended altitude for our satellites.

export const state = () => ({

earthMass: 5.9721986e24, // kilograms

earthRadius: 6371000, // meters

g: 6.67191e-11, // gravitational constant (m^3 kg^-1 s^-2)

satelliteAltitude: 834000 // meters

})

Using the state data, I then established several getters which computed the orbit radius, as well as the satellite’s velocity and angular velocity.

export const getters = {

orbitRadius(state) {

return (state.earthRadius + state.satelliteAltitude) / state.earthRadius

},

satelliteAngularVelocity(state, getters) {

return getters.satelliteVelocity / (state.earthRadius + state.satelliteAltitude)

},

satelliteVelocity(state) {

return Math.sqrt(state.g * state.earthMass / (state.earthRadius + state.satelliteAltitude))

}

}

With these parameters established, we could now visualize an orbit using Three.js.
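Before moving on, a quick sanity check on those numbers can be reassuring. The sketch below repeats the store values as plain constants (assuming everything is in SI units, with the altitude in meters) and runs the same formulas as the getters. The orbitalPeriodMinutes value is an extra I added for illustration and is not part of the store.

```javascript
// Same values as the store (SI units)
const earthMass = 5.9721986e24 // kilograms
const earthRadius = 6371000 // meters
const g = 6.67191e-11 // gravitational constant, m^3 kg^-1 s^-2
const satelliteAltitude = 834000 // meters

// Distance from the center of the earth to the satellite
const orbitDistance = earthRadius + satelliteAltitude

// Circular orbit speed: v = sqrt(G * M / r)
const satelliteVelocity = Math.sqrt((g * earthMass) / orbitDistance)

// Angular velocity in radians per second: w = v / r
const satelliteAngularVelocity = satelliteVelocity / orbitDistance

// Time for one full orbit in minutes: T = 2 * PI / w (illustrative extra)
const orbitalPeriodMinutes = (2 * Math.PI) / satelliteAngularVelocity / 60

// Orbit radius in scene units, where the earth sphere has a radius of 1
const orbitRadius = orbitDistance / earthRadius

console.log(satelliteVelocity, orbitalPeriodMinutes, orbitRadius)
```

This works out to roughly 7.4 km/s and an orbit of about 101 minutes, which is in the range you’d expect for a low earth orbit at that altitude.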

Orbit Visualization with Three

Working in a 3D environment like Three.js makes working with circular orbits pretty simple, once you wrap your head around the basics. We can simulate a satellite orbit with only three elements: the celestial body as a sphere, the inclination tilt as a group, and the ascending node swivel as a group. First, we’ll create the celestial body and place it at the orbit radius we established in our store.

// Initialize celestial body geometry

const celestialBodyGeometry = new THREE.SphereGeometry(1, 10, 10)

// Initialize celestial body material

const celestialBodyMaterial = new THREE.MeshBasicMaterial({

color: 0x000000

})

// Initialize celestial body

const celestialBody = new THREE.Mesh(celestialBodyGeometry, celestialBodyMaterial)

// Scale celestial body

celestialBody.scale.setScalar(0.025)

// Position celestial body

celestialBody.position.x = orbitRadius

Then we’ll create a new group, rotate it according to our inclination, and then add the celestial body to it. That way, when the orbit tilts based on inclination, the satellite will tilt also.

// Initialize tilt

const tilt = new THREE.Group()

// Set orbit rotation from orbital inclination

tilt.rotation.x = THREE.MathUtils.degToRad(orbitTilt)

// Add celestial body to tilt

tilt.add(celestialBody)

Next we’ll create another group, rotate it according to the longitude of the ascending node, and then add our tilt group to it. This allows the entire orbit to swivel. There’s probably a better way to handle these various rotations rather than nesting elements but this works fine in a pinch.

// Initialize swivel

const swivel = new THREE.Group()

// Set swivel rotation from longitude of the ascending node

swivel.rotation.y = THREE.MathUtils.degToRad(orbitSwivel)

// Add tilt to swivel

swivel.add(tilt)

// Add swivel to scene

scene.add(swivel)

With these elements now added to our scene, we can rotate the tilt along its y axis to visualize the satellite’s rotation. The rotation itself is based on the satellite’s angular velocity we established in our store. Similar to Jake’s example, I’m using a time scale variable to help simulate the orbit at different speeds.

// Initialize last updated time

let lastUpdated = performance.now()

// Render

let render = async () => {

// Request animation frame

requestAnimationFrame(render)

// Tick animation

await new Promise((resolve) => setTimeout(resolve, 1000 / 60))

// Get current time

let currentTime = performance.now()

// Calculate simulated time delta

let simulatedTimeDelta = (currentTime - lastUpdated) * timeScale / 1000

// Simulate satellite rotation

tilt.rotation.y += this.satelliteAngularVelocity * simulatedTimeDelta

// Update last updated time

lastUpdated = currentTime

// Render scene

renderer.render(scene, camera)

}
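If the nested groups feel a bit magical, it may help to see the same transform written out as plain rotations. This is a dependency-free sketch, assuming Three.js’s default XYZ Euler order, so the tilt group applies its y rotation (travel along the orbit) before its x rotation (inclination), and the swivel group’s y rotation comes last. The function and parameter names are my own for illustration.

```javascript
const degToRad = (degrees) => (degrees * Math.PI) / 180

// Rotate a point around the x axis (right-handed, as in Three.js)
const rotateX = ([x, y, z], angle) => [
  x,
  y * Math.cos(angle) - z * Math.sin(angle),
  y * Math.sin(angle) + z * Math.cos(angle)
]

// Rotate a point around the y axis
const rotateY = ([x, y, z], angle) => [
  x * Math.cos(angle) + z * Math.sin(angle),
  y,
  -x * Math.sin(angle) + z * Math.cos(angle)
]

// World position of a satellite after `seconds` of simulated time
const satellitePosition = ({ orbitRadius, orbitTilt, orbitSwivel, angularVelocity }, seconds) => {
  // Satellite starts on the x axis at the orbit radius
  let point = [orbitRadius, 0, 0]
  // Travel along the orbit (tilt.rotation.y in the scene)
  point = rotateY(point, angularVelocity * seconds)
  // Inclination (tilt.rotation.x in the scene)
  point = rotateX(point, degToRad(orbitTilt))
  // Longitude of the ascending node (swivel.rotation.y in the scene)
  return rotateY(point, degToRad(orbitSwivel))
}

// A polar orbit reaches the pole after a quarter of a revolution
console.log(satellitePosition(
  { orbitRadius: 1.13, orbitTilt: 90, orbitSwivel: 0, angularVelocity: Math.PI / 2 },
  1
))
```

Nesting groups in the scene graph and composing rotations by hand give the same result. The groups just let Three.js do the bookkeeping.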

And that’s the basics of visualizing orbiting. I realize I left a lot of the Three.js setup out of the explanation above, including the earth itself. If you’d like to see the full example, just head over to the CodePen. In addition to showing you how to add and rotate the earth, I’ve included an evolution that allows you to adjust the mean anomaly.

Launch Sequence

Initially I thought satellites should begin their orbit precisely above a user’s location but that’s not really how launches work. In reality, a satellite is launched and then maneuvers into its final orbit. Also, given the rotation of the earth, most orbits wouldn’t return to the exact same location often. So, while our launch does take place from the user’s location, the orbit itself begins wherever they defined it. It was either that or I would really have to do some rocket science.

Get Location

That being said, it would be nice to have the launch appear like it was happening from the user’s location but I’d rather not ask for access to their GPS to do so. Instead, I opted to use MaxMind’s excellent GeoIP service to convert a user’s IP address into a pair of coordinates. If for some reason we could not locate the user, I simply fell back to the coordinates of Seattle. Here’s how easy it is to use GeoIP.

// Get coordinates from MaxMind

geoip2.city(data => {

// data.location.latitude

// data.location.longitude

})

To place our satellite on our earth sphere using these coordinates, we need to convert the coordinates to a Vector3 position. I wrote a little helper method for this.

let coordinatesToVector = (radius, latitude, longitude) => {

return new THREE.Vector3().setFromSpherical(

new THREE.Spherical(

radius,

THREE.MathUtils.degToRad(90 - latitude),

THREE.MathUtils.degToRad(90 + longitude)

)

)

}

Then just copy the new position onto the satellite.

// Get satellite position

let satellitePosition = coordinatesToVector(1, coords[0], coords[1])

// Position satellite

satellite.position.copy(satellitePosition)
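For anyone curious what the helper is doing under the hood, here is a plain-math sketch of the same conversion without Three.js. It assumes the spherical convention Three.js uses (polar angle measured from the positive y axis, azimuth measured around y from the positive z axis). The name coordinatesToPoint is hypothetical.

```javascript
const degToRad = (degrees) => (degrees * Math.PI) / 180

// Plain-math version of the coordinatesToVector helper
const coordinatesToPoint = (radius, latitude, longitude) => {
  // Polar angle measured down from the +y axis
  const phi = degToRad(90 - latitude)
  // Azimuth around the y axis, measured from +z
  const theta = degToRad(90 + longitude)
  return {
    x: radius * Math.sin(phi) * Math.sin(theta),
    y: radius * Math.cos(phi),
    z: radius * Math.sin(phi) * Math.cos(theta)
  }
}

// The north pole lands on the +y axis, regardless of longitude
console.log(coordinatesToPoint(1, 90, 0))
```

With this convention, latitude 0 and longitude 0 ends up on the positive x axis, so the earth texture needs to be oriented to match.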

Now, let’s simulate a launch sequence.

Launching

I experimented with a few different launch visuals but nothing beats a straight-down shot of the satellite leaving earth. In order to do this, we can place the Three.js camera slightly above the satellite and make sure it is always looking at the satellite. To place it, we can use the same coordinatesToVector method at a slightly higher radius.

// Get camera position

let cameraPosition = coordinatesToVector(1.5, coords[0], coords[1])

// Position camera

camera.position.copy(cameraPosition)

Then, to keep looking, we’ll use the lookAt method in our render loop.

// Look at satellite

camera.lookAt(satellite.position)

We can then use GreenSock to tween our satellite into the atmosphere and make sure the camera stays ahead of it also. First, establish new final positions for both the satellite and camera using the coordinatesToVector method again. Then, create a pair of GSAP tweens for both the camera and satellite.

// Animate camera to final position

let cameraTween = gsap.to(camera.position, {

delay: 9,

duration: 17,

ease: "power1.inOut",

x: finalCameraPosition.x,

y: finalCameraPosition.y,

z: finalCameraPosition.z,

onUpdate: () => {

// Update ratio journey

journey = cameraTween.ratio

}

})

// Animate satellite to final position

let satelliteTween = gsap.to(satellite.position, {

delay: 9,

duration: 17,

ease: "power1.inOut",

x: finalSatellitePosition.x,

y: finalSatellitePosition.y,

z: finalSatellitePosition.z

})

Couple of things. I’m delaying both tweens so they coincide with an audio clip from the new record. The cameraTween also keeps track of the current ratio of animation in a journey variable. I’m using this variable to power some altitude indicators on the UI, based on the final satellite altitude we set up earlier in the store.

Math.round(journey * satelliteAltitude).toString().padStart(6, '0')

And that’s pretty much it. Once the satellite reaches space, it maneuvers to orbit and we redirect the user to that satellite’s page.

Orbit Camera

In an attempt to maximize sharing, each satellite gets a unique page which shows where it is currently orbiting over the earth. In a lot of ways, this page is very similar to the launch visual, except the camera is chasing the satellite. So, instead of a straight line, we’ll use the orbit visualization logic from earlier to animate our satellite. Now, let’s position our camera.

Chase Camera

The camera will be added to the same tilt group as the satellite but placed at a slightly higher altitude and slightly behind. In order to calculate a viable point, I used the absarc method of THREE.Path() to get a circle of points on a slightly higher radius than the satellite orbit. I then picked the point that looked best. The camera then looks at the satellite once again and is rotated for a view over the curvature of the earth.

// Initialize camera points

let cameraPoints = new THREE.Path().absarc(0, 0, 1.5, 0, Math.PI * 2).getPoints(10)

// Reposition camera

camera.position.x = cameraPoints[1].x

camera.position.y = cameraPoints[1].y

// Look at satellite

camera.lookAt(satellite.position)

// Rotate camera

camera.rotation.z = THREE.MathUtils.degToRad(-90)

And that’s pretty much it. As the tilt is rotated, the camera rotates with it and stays focused on the satellite. Similar to the altitude indicator of the launch sequence, I chose to include coordinate indicators to help illustrate that the satellite is actually moving. First, we’ll need the satellite’s current world position.

let satellitePosition = satellite.getWorldPosition(new THREE.Vector3())

Then, I have another helper method named coordinatesFromVector which can get coordinates from vectors.

let coordinatesFromVector = (radius, vector) => {

let lat = 90 - THREE.MathUtils.radToDeg(Math.acos(vector.y / radius))

let lon = ((270 + THREE.MathUtils.radToDeg(Math.atan2(vector.x, vector.z))) % 360) - 180

return [lon, lat]

}
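As a cross-check, here is a dependency-free sketch that inverts the convention used by coordinatesToVector directly (polar angle of 90 minus latitude, azimuth of 90 plus longitude). Its longitude offset is derived from that helper alone, so it may not match the constants in coordinatesFromVector if your earth mesh or scene is oriented differently. The name pointToCoordinates is hypothetical, and the sketch assumes a non-zero vector.

```javascript
const radToDeg = (radians) => (radians * 180) / Math.PI

// Inverse of: polar angle = 90 - latitude, azimuth = 90 + longitude
const pointToCoordinates = ({ x, y, z }) => {
  // Distance from the origin (assumed to be non-zero)
  const radius = Math.hypot(x, y, z)
  // Polar angle from +y, converted back to latitude
  const latitude = 90 - radToDeg(Math.acos(y / radius))
  // atan2(x, z) recovers the azimuth in the range -180..180
  const azimuth = radToDeg(Math.atan2(x, z))
  // Undo the 90 degree offset and wrap back into -180..180
  const longitude = ((azimuth - 90 + 540) % 360) - 180
  return { latitude, longitude }
}

// A point on the +z axis sits at longitude -90 under this convention
console.log(pointToCoordinates({ x: 0, y: 0, z: 1 }))
```

Whichever offsets you settle on, it’s worth round-tripping a few known coordinates through both helpers to confirm they agree.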

We’ll call this method to get the coordinates and then share them with the UI.

let coords = coordinatesFromVector(orbitRadius, satellitePosition)

Now that we’re through satellite creation, we can discuss how users may explore the satellites in augmented reality.

Augmented Reality Exploration

A core piece of this concept is allowing users to explore all of the satellites currently orbiting overhead via a Web AR experience. Once all the satellite orbits are visualized as outlined earlier, this should be as simple as placing the 3D camera in the center of the scene and allowing users to control the rotation of it with their mobile device. In reality, this is going to take permission to access a few internal functions and then configure them to be part of the 3D scene.

Gyroscope

Let’s start with the most important: the gyroscope. Without it, the user wouldn’t be able to have that true AR experience of pointing their device around the sky to browse satellites. Following the blog I wrote about requesting motion and orientation access on mobile devices, we can gain access to the gyroscope.

// Request permission

DeviceOrientationEvent.requestPermission()

.then(response => {

if (response == 'granted') {

// success

}

})

.catch(console.error)

In order to then connect the device’s motion and orientation to the Three.js scene, we’ll need to use the deprecated but still very handy DeviceOrientationControls. I actually used patch-package to re-add it to the latest three installation. Meanwhile, mrdoob thinks maybe he should bring it back. 😅

let controls = new DeviceOrientationControls(camera)

This works pretty well but there is one thing that would make our implementation even better: a compass heading. Since we’re dealing with exact orbits, it would be nice if our orientation controls took into consideration the actual cardinal direction of our user. That way satellites are truly positioned in the right direction. The DeviceOrientationControls has an alphaOffset property which allows you to offset the alpha angle based on the user’s heading. All we need to do is get that heading. Sadly the compass heading tech is a bit hit or miss and I settled on a small hack within my permission request above which waits for the first compass heading that isn’t 0 (the default). I then use it as an offset.

// Get compass heading

let getCompassHeading = (e) => {

// If heading does not equal default

if (e.webkitCompassHeading != 0) {

// Store absolute heading

heading = Math.abs(e.webkitCompassHeading - 360)

// Stop listening to device orientation

window.removeEventListener("deviceorientation", getCompassHeading)

}

}

// Listen for compass heading

window.addEventListener("deviceorientation", getCompassHeading)

I wasn’t able to get compass access on Android devices so I just added another screen in the user journey which suggested the user faced north before starting the exploration experience.

Location

While the compass plays one part in rotating the sky sphere in the appropriate orientation, we also need to rotate it based on the user’s location. A satellite which is exactly overhead of my location in New Orleans should not be exactly overhead in London. To do this, we’ll ask the user for permission to access their GPS using the Geolocation API.

// Get geolocation

navigator.geolocation.getCurrentPosition(data => {

// data.coords.latitude

// data.coords.longitude

})

If the user refuses this permission, we can fall back to the MaxMind GeoIP technique we used in the launch process.

In the exploration Three scene, I add all of the satellites to a new THREE.Group so I can rotate all of them based on the user’s location.

// Initialize satellite group

const satellites = new THREE.Group()

// Rotate satellites to above location

satellites.rotation.x = THREE.MathUtils.degToRad(-90 + coords[0])

satellites.rotation.y = THREE.MathUtils.degToRad(270 - coords[1])

One fun way to debug and test the adjusted orientation of your compass and location is to plot the sun or moon in the sky also. You can use suncalc to get those positions and then plot them in your Three scene. Hell, just plotting the sun and moon could make for a fun activation.

Anyway, here’s how I added the moon with suncalc.

// Initialize moon geometry

const moonGeometry = new THREE.SphereGeometry(1, 40, 40)

// Initialize moon material

const moonMaterial = new THREE.MeshBasicMaterial({

color: 0xCCCCCC

})

// Initialize moon

const moon = new THREE.Mesh(moonGeometry, moonMaterial)

// Scale moon

moon.scale.setScalar(0.05)

// Add moon

scene.add(moon)

// Moon positioning

let positionMoon = () => {

// Get moon position from suncalc

let moonPosition = SunCalc.getMoonPosition(new Date(), coords[0], coords[1])

// Set vector3 from altitude and azimuth

let moonVector = new THREE.Vector3().setFromSphericalCoords(

this.orbitRadius,

moonPosition.altitude - THREE.MathUtils.degToRad(90),

-moonPosition.azimuth - THREE.MathUtils.degToRad(180)

)

// Position moon

moon.position.copy(moonVector)

}

// Position moon

positionMoon()

Now, you should see a gray moon plotted in your scene also. Note, it could be under the horizon. Next, let’s get permission to access the user’s camera so we can add real-time video as the backdrop of our scene.

Camera

Getting access to the camera is very similar to accessing the microphone. In this case, we can set the microphone to false and ask for the environment facing camera. The video stream then goes to an awaiting (but mostly hidden) <video> tag and we wait for it to load, storing the video height and width for later.

// Get camera

let stream = await navigator.mediaDevices.getUserMedia({

audio: false,

video: {

facingMode: 'environment'

}

})

// Get video tag

let video = document.getElementsByTagName('video')[0]

// Replace video tag source

video.srcObject = stream

// Video ready

video.onloadedmetadata = async () => {

// Store video size

videoHeight = video.videoHeight

videoWidth = video.videoWidth

}

We can then turn the video into a THREE.VideoTexture and use it as the scene background but I first wanted to filter the video so I decided to use a THREE.CanvasTexture instead.

// Initialize video canvas

let videoCanvas = document.createElement('canvas')

// Resize canvas

videoCanvas.height = window.innerHeight

videoCanvas.width = window.innerWidth

// Initialize video texture

const videoTexture = new THREE.CanvasTexture(videoCanvas)

// Add video texture to scene background

scene.background = videoTexture

I then wrote a little function that could draw the video onto my full screen videoCanvas and make sure the video image (regardless of dimensions) was contained nicely on the screen. To accomplish this, I employed the use of the excellent intrinsic-scale library. Then, I chose to desaturate the canvas and then multiply the teal coloring of the Earthling release.

// Get video

let video = document.getElementsByTagName('video')[0]

// Get video dimensions

let { videoHeight, videoWidth } = video

// Get video canvas

let canvas = this.videoCanvas

// Resize canvas

canvas.height = window.innerHeight

canvas.width = window.innerWidth

// Get video context

let context = canvas.getContext('2d')

// Clear canvas

context.clearRect(0, 0, canvas.width, canvas.height)

// Calculate containment

let { width, height, x, y } = contain(videoWidth, videoHeight, canvas.width, canvas.height)

// Draw video

context.drawImage(video, x, y, width, height, 0, 0, canvas.width, canvas.height)

// Desaturate canvas

this.desaturate(canvas)

// Add teal coloring

context.globalCompositeOperation = "multiply"

context.fillStyle = "#00A0AA"

context.fillRect(0, 0, canvas.width, canvas.height)

// Reset composite operation

context.globalCompositeOperation = "source-over"
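The desaturate method isn’t shown above, so here is one possible way it could work, not necessarily what the app does. This sketch assumes RGBA pixel data like the Uint8ClampedArray you get from getImageData and uses the common Rec. 601 luma weights. The name desaturatePixels is hypothetical.

```javascript
// Convert RGBA pixel data to grayscale in place (alpha is left untouched)
const desaturatePixels = (pixels) => {
  for (let i = 0; i < pixels.length; i += 4) {
    // Rec. 601 luma weights for red, green, and blue
    const luma = 0.299 * pixels[i] + 0.587 * pixels[i + 1] + 0.114 * pixels[i + 2]
    pixels[i] = pixels[i + 1] = pixels[i + 2] = luma
  }
  return pixels
}

// In the browser this would wrap getImageData / putImageData:
// const image = context.getImageData(0, 0, canvas.width, canvas.height)
// desaturatePixels(image.data)
// context.putImageData(image, 0, 0)

// One red pixel and one dark gray, half-transparent pixel
console.log(desaturatePixels(Uint8ClampedArray.of(255, 0, 0, 255, 10, 10, 10, 128)))
```

Reading pixels back every frame isn’t free, so on slower devices it may be worth trying a smaller canvas or the 2D context’s filter property where it’s supported.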

Finally, within the scene render loop, we should render the video canvas and let the scene background know it should update.

// Render video canvas

renderVideoCanvas()

// Update scene background

scene.background.needsUpdate = true

Now that we have our satellites orbiting in a perfectly oriented sky over our real world backdrop, we can finally detect when a user is pointing at one.

Targeting and Playback

In order to detect when a user is pointing their device at a satellite in the sky, we’ll use a THREE.Raycaster and a THREE.Vector2 pointer placed in the middle of the viewport.

// Initialize raycaster

const raycaster = new THREE.Raycaster()

// Initialize pointer as Vector2

const pointer = new THREE.Vector2()

Then, in our render loop, we’ll update our picking ray and see if it intersects any of our satellites. If it does, we can play back the associated audio.

// Update picking ray

raycaster.setFromCamera(pointer, camera)

// Calculate intersections

const intersects = raycaster.intersectObjects(satellites.children)

// If intersections

if (intersects.length) {

// Play associated audio

}
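A default Vector2 is (0, 0), which is the center of the viewport in normalized device coordinates, so the picking ray simply follows the direction the camera is facing. For a spherical satellite, the check boils down to something like a ray versus sphere test. The sketch below is a simplified stand-in for intuition, not how THREE.Raycaster is implemented, and it assumes the direction is a unit vector and the camera sits outside the sphere. The name rayHitsSphere is hypothetical.

```javascript
// Does a ray from `origin` along unit vector `direction` hit a sphere?
const rayHitsSphere = (origin, direction, center, radius) => {
  // Vector from the ray origin to the sphere center
  const toCenter = center.map((value, i) => value - origin[i])
  // Distance along the ray to the point closest to the center
  const along = toCenter.reduce((sum, value, i) => sum + value * direction[i], 0)
  // Sphere is behind the viewer: treated as a miss
  if (along < 0) return false
  // Squared distance from the sphere center to that closest point
  const distanceSquared =
    toCenter.reduce((sum, value) => sum + value * value, 0) - along * along
  return distanceSquared <= radius * radius
}

// A Three.js camera looks down its negative z axis by default
console.log(rayHitsSphere([0, 0, 0], [0, 0, -1], [0, 0, -5], 0.5))
```

Small, distant satellites make for tiny targets, so you may want a more forgiving radius for the hit test than the one you render.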

I’m using Howler to play back the satellite recording. I had to add a bit of logic to deal with sounds that haven’t been loaded yet and make sure a sound stops when a user stops focusing on a particular satellite. Like always, Howler gets the job done.
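Here’s a rough sketch of the focus and blur part of that logic, which is my own reconstruction rather than the app’s code. To keep it runnable anywhere, the sound factory is injected. In the browser it could be something like (url) => new Howl({ src: [url] }). The createFocusPlayer name and its methods are hypothetical, and the handling of sounds that are still loading isn’t reproduced here.

```javascript
// createSound(url) must return an object with play() and stop() methods
const createFocusPlayer = (createSound) => {
  // Cache one sound per satellite id
  const sounds = new Map()
  let currentId = null

  // Call when the picking ray hits nothing
  const blur = () => {
    if (currentId !== null) sounds.get(currentId).stop()
    currentId = null
  }

  // Call when the picking ray hits a satellite
  const focus = (id, url) => {
    // Already playing this satellite
    if (id === currentId) return
    // Stop whatever was playing before
    blur()
    if (!sounds.has(id)) sounds.set(id, createSound(url))
    sounds.get(id).play()
    currentId = id
  }

  return { focus, blur }
}

// Usage with a fake sound that logs instead of playing audio
const demo = createFocusPlayer((url) => ({
  play: () => console.log('play', url),
  stop: () => console.log('stop', url)
}))
demo.focus('a', 'a.mp3')
demo.blur()
```

In the render loop, you would call focus when intersects has an entry and blur when it’s empty.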

That concludes most of the high level programming decisions. I’d also like to briefly talk about how design was handled.

Design Process

I thought it might be interesting to share how systematic my design process can be sometimes. I will spend the bulk of a project’s time on development, so I lean very heavily on “functional design.” To me, that involves designing interfaces which are accessible, familiar, responsive, and fast. I also find it rather difficult for my brain to bounce back and forth between development and design. For this reason, I avoid adding any real aesthetic to my projects until the core proposed functionality is in. This allows the client and me to stay focused on the mechanics of the activation and not get bogged down by sometimes emotional design decisions. My design process is focused on mobile first to allow clients to understand the limited real estate there. I will later on think about how to take advantage of larger screens.

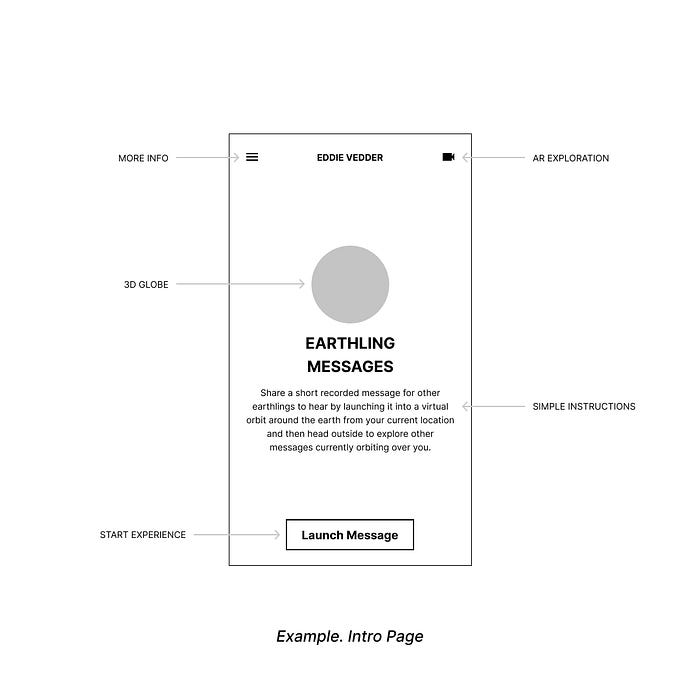

Wireframes

Once a project is approved, I will quickly send the client a wireframe of the user journey. This allows them to begin grasping what I had in mind for their users and where they can begin to add the client’s voice for copy. I keep these abstract and void of colors. They were inspired by patent diagrams and seek to only explain how the interface will work. Once this user journey is agreed upon, I will actually try to develop a functional version of it as close to this wireframe as possible. Then, once that is approved, I’ll move into adding design.

Design System

By building out the wireframes as a prototype first, I begin to understand which components make up the experience. I can then translate these components into a simple design system in Figma. Inspiration will typically come from the album packaging and I’ll begin to translate the aesthetic decisions there into the colors, typography, and iconography that make up the app. Using the existing art as inspiration makes getting approvals much easier but I will adjust things as needed to make them more functional and accessible.

Screens

With the design system created, I can now create a new series of screens which are mostly the designed versions of the functional prototype. This session usually reveals issues between screens and I work towards bringing harmony to the overall design. I also encounter interesting revelations like how some designed components can be reused between different areas of the app. I will then use Figma’s prototyping function to wire up the screens but I will seldom send this to the client. I’d rather they continue to test the functional prototype.

CSS

On the actual app, I’ve been enjoying using Tailwind to translate my design system into real components. I’ll begin this work back in the wireframe / prototyping step and even build in all my responsive breakpoints before adding in the aesthetics. I just love how powerful Tailwind can be in handling the variety of states an element might have. It lends itself really well to my process.

Thanks

Thanks to Tim Hrycyshyn, Elliott Althoff, and Alexander Coslov from Republic Records for bringing me in on this project and helping co-pilot it to completion. Thanks to Eddie’s management team for keeping the feedback brief and the enthusiasm rolling. Finally, thanks to Eddie for making music that has inspired me for literally decades. Earthling is out everywhere now. Stream or purchase it today.